Is artificial intelligence more like science or philosophy?

Science and philosophy both strive to increase our knowledge and understanding, but they deal with different types of questions. Science deals with questions that have an agreed-upon systematic method for answering them. Philosophy, conversely, deals with questions that currently lack such a method. However, when enough progress is made on a philosophical topic, that topic can sometimes shed the label of philosophy and adopt the label of science. This happens when philosophical work is adopted by other well-established disciplines, or when it matures enough that we are confident in calling it a science. One sign of this evolution from philosophy to science is that scientists used to be called "natural philosophers".

In this respect, artificial intelligence is more like philosophy than science. In the history of computer science, the label "artificial intelligence" has been used to refer to various technical projects. However, once those projects became well understood, they shed the label "AI" and adopted new labels. Examples include object-oriented programming, speech recognition, robot motion planning, facial recognition and other vision processing algorithms used in photography, industrial robotics, security, and cinema. (These examples are from Jeffrey Bradshaw.) The popular media still refer to some of these technologies as "AI", but the label has ceased to be useful for professionals working in those fields.

This phenomenon has been called the "AI effect". The AI effect is not new. In 1979, Douglas Hofstadter wrote,

"It is interesting that nowadays, practically no one feels that sense of awe any longer — even when computers perform operations that are incredibly more sophisticated than those which sent thrills down spines in the early days. The once-exciting phrase “Giant Electronic Brain” remains only as a sort of “camp” cliché, a ridiculous vestige of the era of Flash Gordon and Buck Rogers. It is a bit sad that we become blasé so quickly. There is a related “Theorem” about progress in AI: once some mental function is programmed, people soon cease to consider it as an essential ingredient of “real thinking.” The ineluctable core of intelligence is always in that next thing which hasn’t yet been programmed. This “Theorem” was first proposed to me by Larry Tesler, so I call it Tesler’s Theorem: “AI is whatever hasn’t been done yet.”

The technology writer Kevin Kelly, author of The Inevitable, echoed this sentiment: “What we can do now would be A.I. fifty years ago. What we can do in fifty years will not be called A.I.”

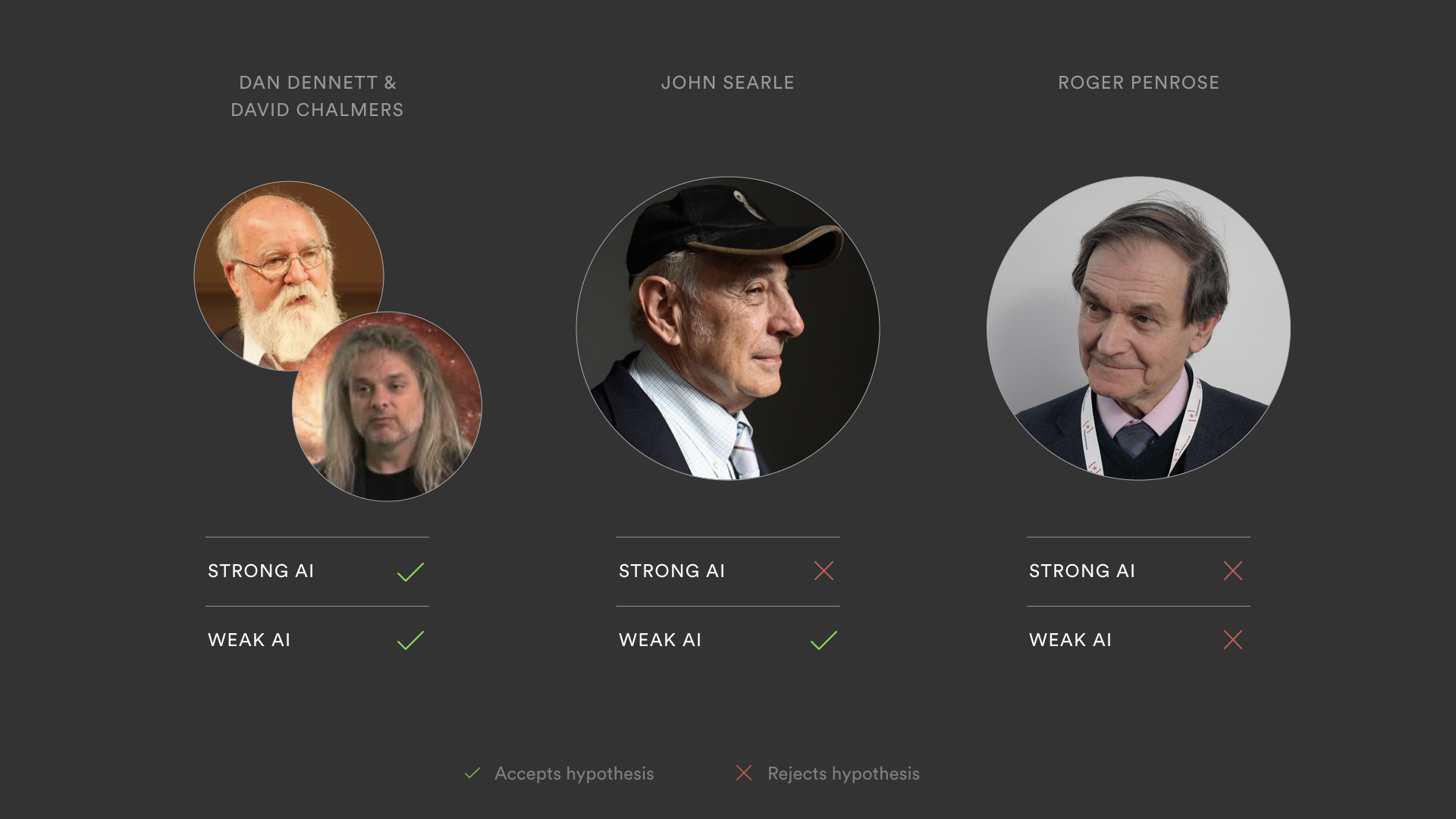

AI is more like philosophy than science in another way. In both philosophy and artificial intelligence, there is a lack of expert agreement. According to the philosopher John Searle, "The fact that philosophical questions tend to be those for which there is no generally accepted procedure of solution also explains why there is no agreed-upon body of expert opinion in philosophy." The lack of expert agreement on issues related to AI is illustrated by public comments from tech leaders on the dangers and risks of AI.

In one camp, you have those who are so optimistic about our progress in AI that they are pessimistic about what it can become. These include notable figures such as Elon Musk, Bill Gates, and Stephen Hawking. Musk said, "With artificial intelligence, we are summoning the demon," and AI is “our biggest existential threat…The risk of something seriously dangerous happening is in the five year timeframe. 10 years at most.” Bill Gates chimed in, saying, "I agree with Elon Musk and some others on this and don't understand why some people are not concerned." The late Stephen Hawking said, "The development of full artificial intelligence could spell the end of the human race... It would take off on its own, and re-design itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded."

On the other side of this debate is Andrew Ng, one of the most respected AI experts in the world, who said, "There's also a lot of hype, that AI will create evil robots with super intelligence. That's an unnecessary distraction." He also said that worrying about evil AIs is like worrying about overpopulation on Mars. AI and robotics pioneer Rodney Brooks questioned the credentials of those in the first camp: "I think it's very easy for people who are not deep in the technology itself to make generalizations, which may be a little dangerous. And we've certainly seen that recently with Elon Musk, Bill Gates, Stephen Hawking, all saying AI is just taking off and it's going to take over the world very quickly. And the thing that they share is none of them work in this technological field." Mark Zuckerberg, responding to people in the first camp, called their doomsday scenarios "pretty irresponsible."

There is currently no consensus on how to resolve the above disagreements in a systematic way. This means that we need to be even more careful in discerning when these public figures are speaking as scientists and credentialed technologists, and when they are just sharing opinions on matters in which they have few or no credentials. Examining the philosophical premises underlying claims about AI should empower us to question whether these public intellectuals have the relevant philosophical expertise to discuss these issues responsibly. I am sure Stephen Hawking was brilliant as an expert on black holes. Elon Musk sure seems like a genius when it comes to starting tech companies. And Bill Gates' wealth reveals his business and software expertise. However, their respective areas of expertise do not automatically translate into insightful or authoritative opinions on AI.

While artificial intelligence may be conflated with science, the label has often caused more confusion than clarity. Michael I. Jordan, a computer science professor at UC Berkeley, recently wrote, “this is not the classical case of the public not understanding the scientists — here the scientists are often as befuddled as the public.” This is because questionable philosophical assumptions hitchhike on the underbelly of scientific progress. According to the AI historian Margaret Boden, "some computer scientists deliberately reject [the] label 'AI'" because the label carries too much philosophical baggage. Even John McCarthy, who coined the term "Artificial Intelligence" in 1955, said that he regretted the term and wished that he had chosen something else.

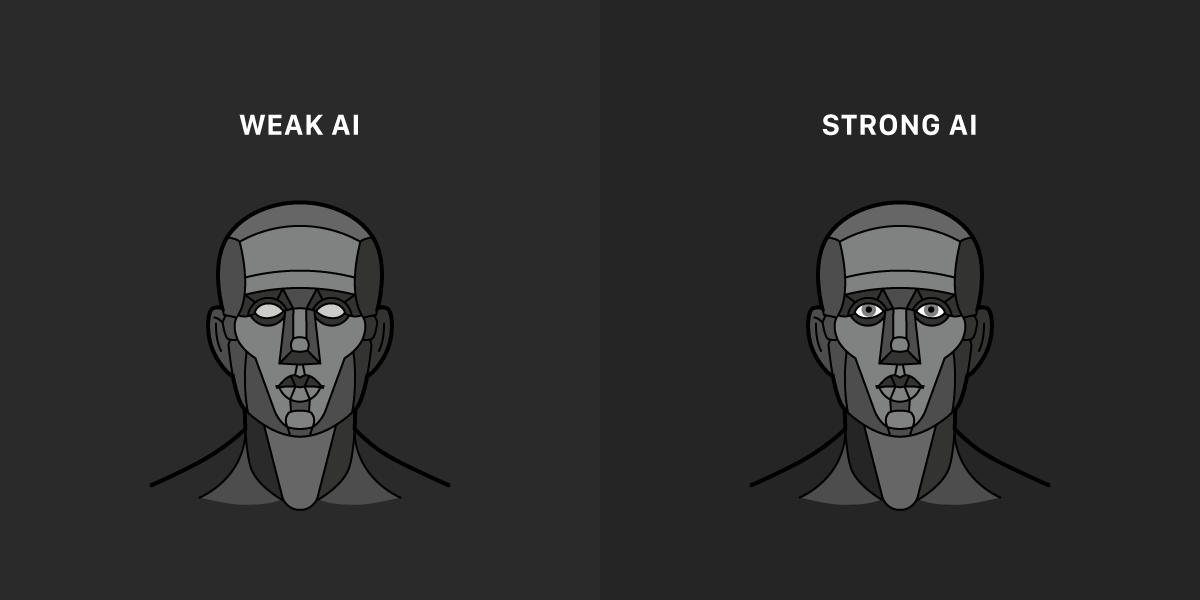

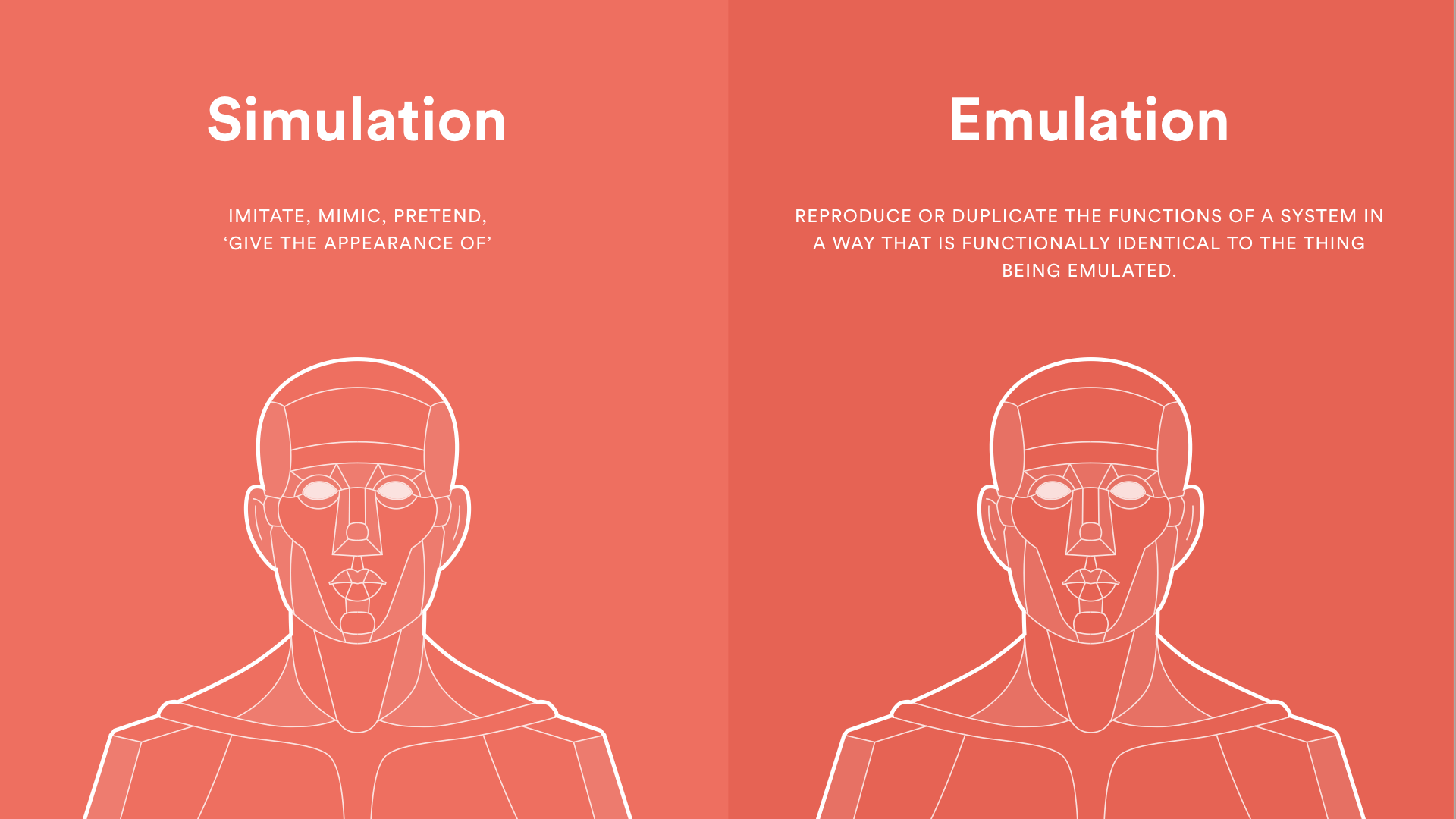

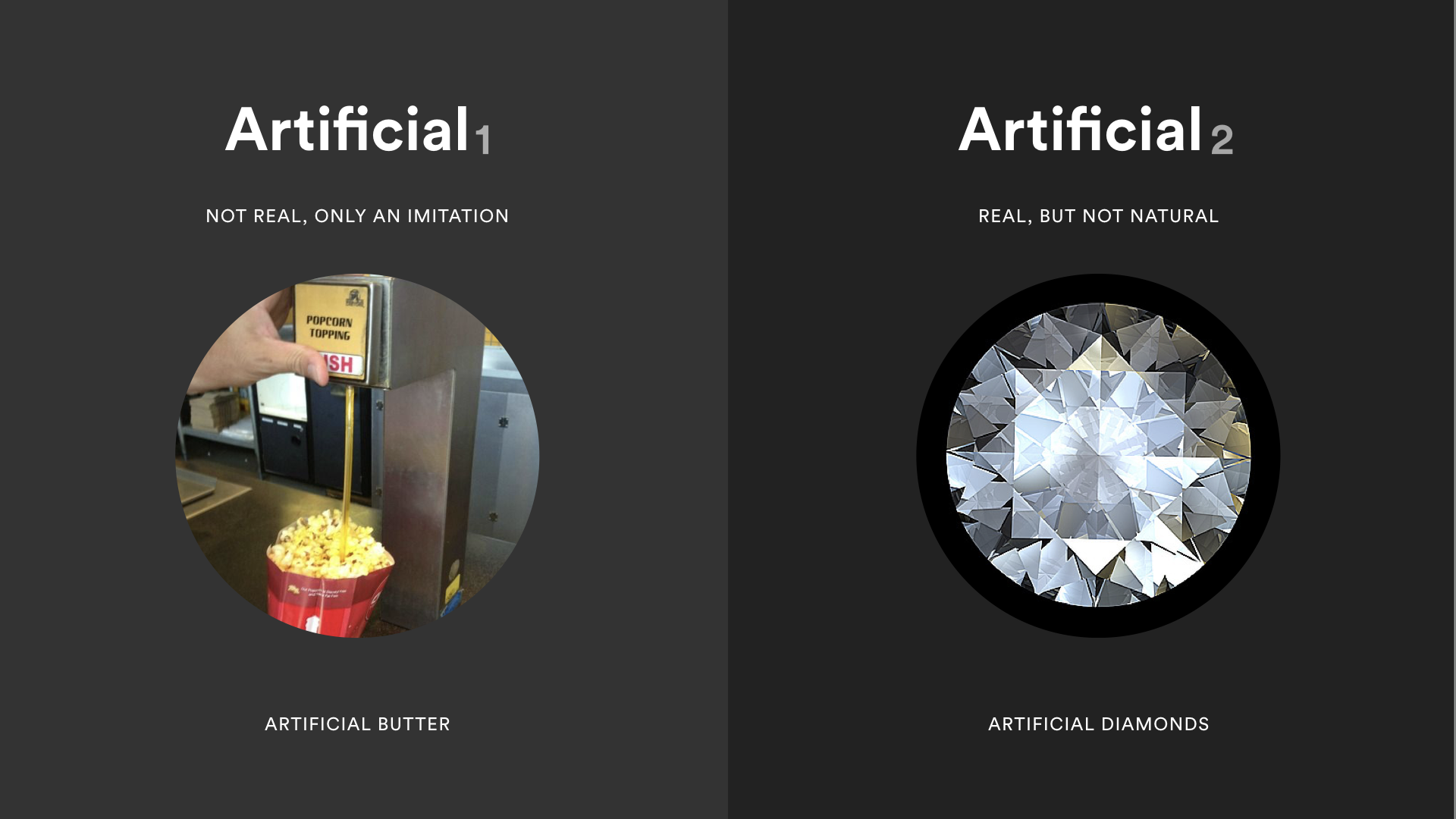

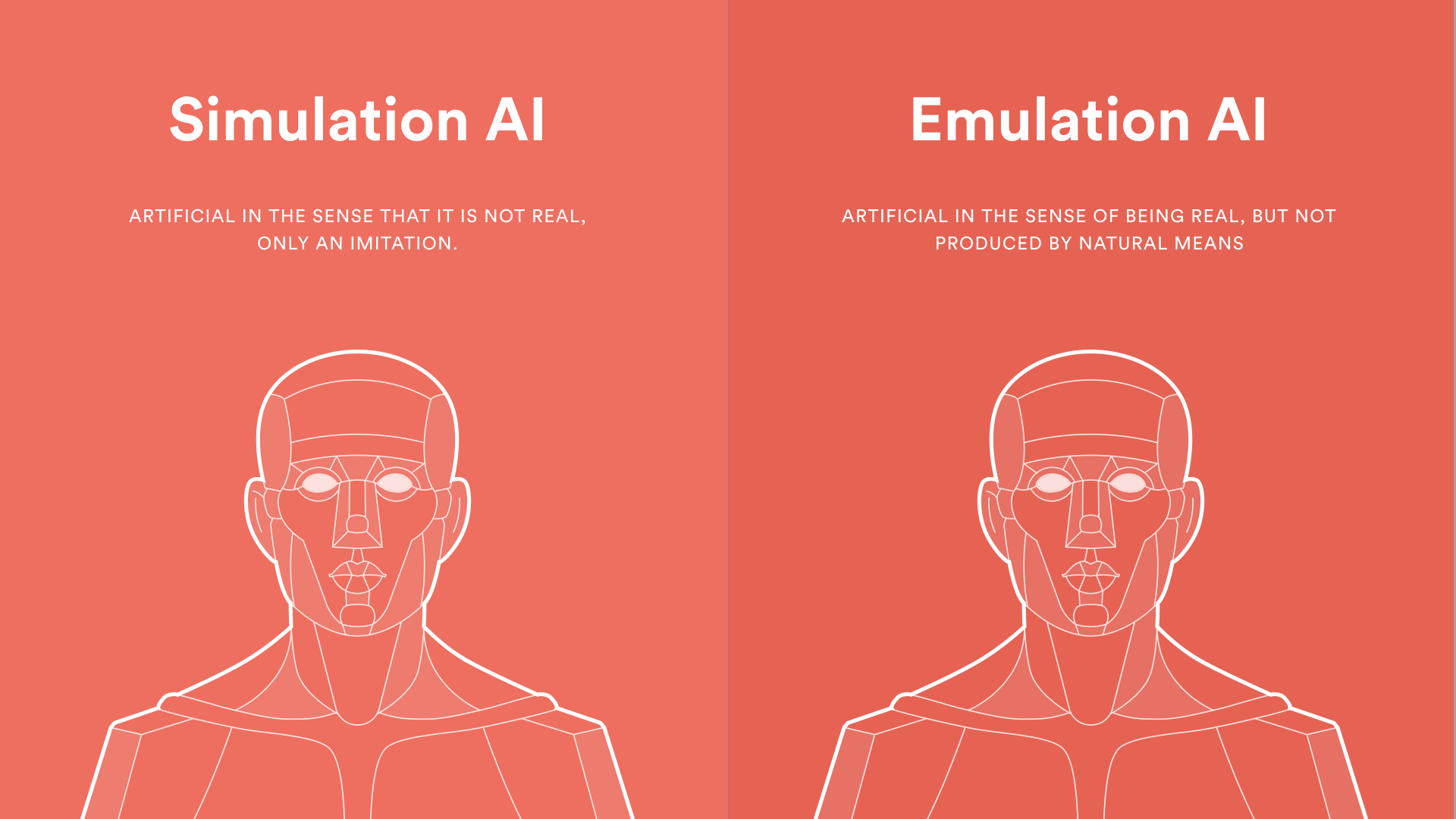

That a question is more philosophical than scientific doesn't mean we can't make progress. It just means that we have to be even more precise and rigorous in our analysis. Improving our analysis means making our philosophical premises more explicit. It means breaking apart concepts that are often lumped together but are better understood separately. It means being extra clear about the terms we use and how we use them. It can even mean abandoning terms that create more confusion than clarity. Applying these techniques to the analysis of AI means separating the engineering and the cognitive approaches to AI. It means agreeing on clear definitions for weak vs strong AI and questioning existing distinctions such as narrow vs general AI. Perhaps it even means joining the computer scientists who reject the label 'AI', given that the label creates more confusion than clarity. As AI hype dies down, more machine learning professionals are spurning the phrase. One machine learning friend of mine recently remarked rather frankly, "The term artificial intelligence is stupid." Perhaps we are stuck with the label until the next AI winter, but shedding it for good may help advance both clear philosophy and sound science.